Dr. Barry Newell is a Visiting Research Fellow at the United Nations University International Institute for Global Health in Kuala Lumpur, Malaysia, and an Honorary Associate Professor (Research School of Engineering) and a Visiting Fellow (Fenner School of Environment & Society) at the Australian National University. He has also held research and teaching positions at Yale University and Kitt Peak National Observatory (Arizona). A physicist who focuses on the dynamics of social-ecological systems, he has particular interest in the critical importance of shared language in trans-disciplinary investigations of system behaviour. His work involves developing practical ways for groups to use systems thinking and focused dialogue to improve their decisions and policy making. Developing Collaborative Conceptual Modelling (CCM), a high-level approach which provides conceptual guidance for trans-disciplinary research and cross-sector management, is one aspect of this work.

Systems Thinking and the Cobra Effect

A famous anecdote describes a scheme that the British Colonial Government implemented in India to control the population of venomous cobras plaguing the citizens of Delhi. The scheme offered a bounty for every dead cobra brought to administration officials. The policy initially appeared successful, with intrepid snake catchers claiming their bounties and fewer cobras being seen in the city. Yet, instead of tapering off over time, the number of dead cobras presented for bounty payment rose steadily each month. Nobody knew why.

Right from birth, we start to see connections in the world around us. A meal cures hunger; sleep relieves tiredness; problems have causes, and eliminating the cause will yield a solution. What we are doing (whether we know it or not) is forming mental models of the way that cause and effect are related. Our mental models exert an incredibly powerful influence on our perceptions and thoughts. They determine what we see, tell us what events are important, help us to make sense of our experiences, and provide convenient cognitive shortcuts to speed our thinking.

However, they can lead us astray. Most of our cause–effect experiences involve very simple, direct relationships. As a result, we tend to think in terms of ‘linear’ behaviour — double the cause to double the effect, halve the cause to halve the effect. In reality, as we will see with the cobras of Delhi, the world is often more complex than we realise.

Counter-intuitive behaviour

We live in a highly connected world where management actions have multiple outcomes. When action is taken, the intended outcome might occur, but a number of unexpected outcomes will always occur. While an unexpected outcome can be beneficial, such serendipitous events are extremely rare. What is more likely is that the unexpected outcomes will be unwanted — these outcomes can be thought of as counter-intuitive ‘policy surprises’. If we want to avoid unwanted policy surprise, then we need to improve our intuitions concerning the operation of cause and effect in complex social-ecological systems.

Developing methods to help us visualise and understand cause-and-effect relations in complex systems is of great importance. We need ways to progress beyond linear thinking. In particular, we need to understand the concept of feedback and appreciate the dominant role it plays in determining system responses to management initiatives.

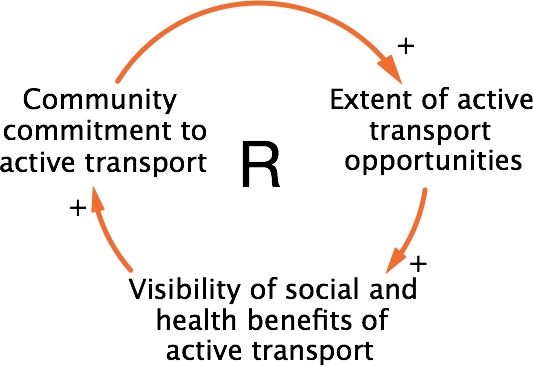

Reinforcing and balancing feedback

There are just two forms of basic feedback loop: reinforcing and balancing. Figure 1 provides an example of ‘reinforcing feedback’ (also called ‘positive feedback’). This diagram expresses the hypothesis that an increase in the availability of active-transport opportunities will increase the extent to which community members ‘see’ the benefits of active transport. An increase in the visibility of benefits leads to increased community commitment to active transport, which leads to a further increase in the availability of opportunities for active transport. Similarly, if the level of any of the variables in this reinforcing loop is decreased, this change will propagate around the loop to decrease that level further. This amplifying effect, which can drive accelerating growth or collapse of the active transport system, can be triggered by an increase or decrease in any of the variables in the loop.

Figure 1. A Reinforcing Feedback Loop. In this influence diagram the blocks of text represent system variables and the arrows represent the processes or mechanisms by which a change in the level of one variable affects the level of another variable. A plus sign on an arrow indicates that a change in the variable at the tail of the arrow will cause the variable at the head of the arrow to eventually change in the same direction. The upper-case R in the centre of the diagram indicates that this is a reinforcing loop which generates feedback forces that act to amplify change.
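
The amplifying behaviour described for Figure 1 can be sketched numerically. The Python fragment below is a minimal illustration, not a calibrated model. The function name, the gain of 0.1 per link, and the treatment of each variable as a deviation from an arbitrary baseline are all assumptions introduced here.

```python
def simulate_reinforcing_loop(initial_nudge, gain=0.1, steps=20):
    """Propagate a change around a three-variable loop whose links are all '+'.

    Each variable is a deviation from an arbitrary baseline (0.0 = no change).
    The gain per link is a hypothetical value chosen for illustration only.
    """
    opportunities, visibility, commitment = initial_nudge, 0.0, 0.0
    history = [opportunities]
    for _ in range(steps):
        visibility += gain * opportunities   # more opportunities -> benefits more visible
        commitment += gain * visibility      # visible benefits -> stronger commitment
        opportunities += gain * commitment   # commitment -> more opportunities
        history.append(opportunities)
    return history


growth = simulate_reinforcing_loop(+1.0)    # a small initial increase
collapse = simulate_reinforcing_loop(-1.0)  # a small initial decrease
print(growth[-1], collapse[-1])
```

Under these assumptions a positive nudge keeps growing and a negative nudge keeps shrinking, and the change per step gets larger each time around the loop. The same structure therefore produces either accelerating growth or accelerating collapse, depending only on the direction of the initial change.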

The other basic cause-effect loop is ‘balancing feedback’. Balancing feedback (also called ‘negative feedback’) is ‘goal seeking’. It is the basis for artificial and natural control systems and system resilience. Figure 2 shows a case where the goal is to maintain a sustainable level of resource consumption — high enough to meet the community’s needs, but low enough to ensure the long-term viability of the resource. If, for example, the actual level of resource consumption rises above the sustainable level, then action is taken to reverse the change. The feedback loop works to minimise the difference between the two levels — ideally the difference will be maintained close to zero.

Figure 2. A Balancing Feedback Loop (B), which generates feedback forces that act to oppose change. A minus sign on an arrow indicates that a change in the variable at the tail of the arrow will cause the variable at the head of the arrow to eventually change in the opposite direction. The signs shown in this diagram are appropriate for the case where actual consumption exceeds the sustainable level.
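
The goal-seeking behaviour of Figure 2 can be sketched in the same way. In this minimal Python illustration, the function name, the consumption units, and the assumption that corrective action removes 30 per cent of the gap at each step are hypothetical choices made here, not values from the diagram.

```python
def simulate_balancing_loop(actual, sustainable, response=0.3, steps=15):
    """Each step, corrective action removes a fraction of the current gap.

    `response` is a hypothetical strength for the corrective action.
    """
    history = [actual]
    for _ in range(steps):
        gap = actual - sustainable   # positive when consumption is too high
        actual -= response * gap     # action opposes the gap (the '-' link)
        history.append(actual)
    return history


overshoot = simulate_balancing_loop(actual=150.0, sustainable=100.0)
undershoot = simulate_balancing_loop(actual=60.0, sustainable=100.0)
print(round(overshoot[-1], 2), round(undershoot[-1], 2))
```

Whether consumption starts above or below the sustainable level, the gap shrinks at every step. This is the opposite of the reinforcing case: the loop resists the initial change instead of amplifying it.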

In a complex, real-world system there will be multiple reinforcing and balancing feedback loops, interacting with each other. Despite this, most of us still think in terms of simple causal chains, and immediate, linear effects. In particular, we tend to overlook feedback between decisions that are made by management groups that are operating in isolation from one another. This cross-sector feedback is largely invisible because there is little communication between the managers in the different ‘silos’. To make matters worse, major feedback effects are often delayed (sometimes by decades or longer!), or occur at locations distant from the triggering actions, making them hard to detect or attribute.

A decision maker who seeks to anticipate the way that a complex system will respond to a planned management initiative faces a ‘complexity dilemma’. The behaviour of a complex system emerges from the feedback interactions between its parts. This means that, on the one hand, its behaviour cannot be understood from a study of the parts taken one by one in isolation — the system needs to be looked at as a whole. On the other hand, such systems turn out to be far too complex to be studied as a whole. When faced with this dilemma, time-poor decision makers tend to do one of two things. Either they study a small part of the system in isolation, thus ruling out their chances of seeing significant cross-sector feedback effects, or they simply abandon their attempt to be systemic. Either way they fail to avoid future policy surprise.

But there is a third possibility. Managers sometimes seek to develop working dynamical models that can help them to anticipate possible unwanted outcomes of proposed actions. The idea that one can have a model to run in advance of reality is very attractive. Nevertheless, the construction of useful detailed models is a difficult undertaking that requires significant amounts of reliable time-series data and the participation of expert data analysts and modellers. In many situations these conditions cannot be met.

Simple dynamical models

There is, however, a type of modelling that can improve decision making in almost all situations. Efforts to build extensive working models of complex business systems have led to the discovery of simple feedback structures that occur over and over again in many different contexts. These ‘system archetypes’ have characteristic behaviours that can provide feedback-based explanations of behavioural patterns that are commonly observed in human–environment systems and are relevant in real-world policy-making and management contexts.

Take, for example, a Fixes That Fail feedback structure (Figure 3). This archetype captures the common tendency of decision makers, when faced with a problem, to apply a ‘fix’ that reduces the strength of the problem. While it seems to work in the first instance, the fix fails in the long run because it has an unexpected outcome that amplifies the problem.

Figure 3. Freeway Feedback. This influence diagram shows a specific example of the generic Fixes That Fail system archetype. The short parallel lines drawn across two of the arrows represent delayed effects.

The dynamical story told in Figure 3 concerns the development of freeways as a means to reduce traffic congestion. As existing roads become congested, commuting times increase. Commuting times in excess of the desired time frustrate the community and eventually lead to corrective action: the ‘fix’. In this case, the fix involves constructing new freeways. As soon as a new freeway is opened to traffic there is an immediate reduction in commuting times, and everyone is happy. Then, with a delay, the existence of the freeway (with its short commuting times) encourages people to move out to the peri-urban areas at the end of the freeway. So, the new freeway triggers urban sprawl. More people, more cars, and more congestion. Once again commuting times become a problem, even on the freeway. What to do? Well, last time we had this problem we built a new freeway…
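
The freeway story combines a fast balancing loop (the fix) with a slow reinforcing side effect (sprawl). The Python sketch below is one hedged way to see the resulting pattern. Every number in it is hypothetical, including the capacity units, the size of each new freeway, and the assumption that road capacity eventually attracts 20 per cent more demand than it can carry at the desired commuting time. The slow adjustment of demand stands in for the delay marks in Figure 3.

```python
def simulate_freeway_fix(steps=40, desired_ratio=1.0):
    """Toy 'Fixes That Fail' model; all parameter values are hypothetical."""
    capacity, demand = 100.0, 120.0   # arbitrary traffic units
    new_freeway = 50.0                # capacity added by each fix
    induced_factor = 1.2              # long-run demand attracted per unit of capacity
    sprawl_rate = 0.1                 # a small rate means a long delay
    records = []
    for year in range(steps):
        ratio = demand / capacity     # proxy for commuting time (1.0 = desired)
        built = ratio > desired_ratio
        if built:
            capacity += new_freeway   # the fix: immediate relief
        records.append((year, round(ratio, 2), built))
        # Delayed side effect: sprawl slowly raises demand toward the
        # level that the enlarged road network attracts.
        demand += sprawl_rate * (induced_factor * capacity - demand)
    return records


records = simulate_freeway_fix()
build_years = [year for year, _, built in records if built]
print(build_years)
```

In this sketch each new freeway brings the congestion ratio back below the desired level, but the slow growth in demand pushes it up again some years later and triggers another round of construction. The fix is repeated, and total traffic ends up far higher than where it started.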

System archetypes are, of course, not models of complex systems, and must therefore be used with an understanding of their limitations. Their discovery does, however, suggest that simple dynamical models have the potential to improve policymaking and management decisions in complex situations. Experience with such models can alert decision makers at all levels to the potential for feedback forces to cause policy surprise. For example, it would be a significant step forward if all decision makers recognised the potential for balancing feedback structures to cause the well-known phenomenon of ‘policy resistance’, where people push on a system and the system pushes right back to resist their efforts. This, after all, is the essence of resilience.


Simple dynamical models must be used with caution. They can never tell the whole story. Their main value lies in their potential to make concrete the message that we must take into account feedback effects in the policy-design processes for complex systems. It is not necessary for all policymakers to become competent system modellers, but it is necessary for them to understand that feedback effects must be considered. Given the increasing complexity of human–environment systems in the 21st century, the need for practical ways to promote and use feedback thinking has never been greater.

UNU is using this approach to help stakeholders map out opportunities and barriers in the field of urban health, which is especially amenable to such techniques given the multi-faceted and interconnected nature of health outcomes in cities. When different stakeholders create their own diagrams, these can be merged and simplified, allowing everyone to see how their own understanding of the system relates to everyone else’s, thus strengthening communication and broadening their outlook. The diagrams also serve as an educational tool through which people with very different academic training and understandings of a problem can come together speaking a common language.

With this understanding in mind, policymakers can at least be aware of potential unintended consequences along the way, and may identify some areas where synergies can be found. The systems analysis approach is also explicitly identified as the key methodology for a new ICSU 10-year research program on Health and Well-being in the Changing Urban Environment, co-sponsored by UNU and the Interacademy Medical Panel (IAMP) and hosted by the Chinese Academy of Sciences in Xiamen.

The cobra effect

By now, you may have figured out what happened in the Delhi anecdote with which we opened. Realising that the cobra bounty converted the snakes into valuable commodities, entrepreneurial citizens started actively breeding them (a similar, well-documented episode occurred when the French tried to eradicate rats from Hanoi).

Under the new policy, cobras provided a rather stable source of income. In addition, it was much easier to kill captive cobras than to hunt them in the city. So the snake catchers increasingly abandoned their search for wild cobras, and concentrated on their breeding programs. In time, the government became puzzled by the discrepancy between the number of cobras seen around the city and the number of dead cobras being redeemed for bounty payments. They discovered the clandestine breeding sites, and so abandoned the bounty policy. As a final act the breeders, now stuck with nests of worthless cobras, simply released them into the city, making the problem even worse than before!
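
The anecdote can be turned into a toy simulation. The Python sketch below is purely illustrative: the starting population, the hunting and breeding rates, and the month in which the bounty is cancelled are invented numbers, and the model leaves out natural births and deaths as well as the gradual shift of effort away from hunting.

```python
def simulate_cobra_bounty(months=24, cancel_month=18):
    """Toy cobra-bounty model; every rate below is a hypothetical value."""
    wild, captive = 1000.0, 0.0
    redeemed = []                            # dead cobras presented each month
    for month in range(months):
        if month < cancel_month:
            hunted = 0.05 * wild             # wild cobras caught this month
            wild -= hunted
            captive = 1.5 * captive + 10.0   # breeding plus new breeding stock
            culled = 0.2 * captive           # farmed cobras killed for bounty
            captive -= culled
            redeemed.append(hunted + culled)
        else:
            wild += captive                  # worthless stock released into the city
            captive = 0.0
            redeemed.append(0.0)
    return redeemed, wild


redeemed, final_wild = simulate_cobra_bounty()
print(round(redeemed[0]), round(redeemed[17]), round(final_wild))
```

With these made-up rates the wild population falls while the bounty is in place, yet the number of cobras redeemed climbs as the farms grow. When the bounty is cancelled and the captive stock is released, the wild population ends up larger than it was at the start, which is the pattern the anecdote describes.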

The lesson is that simplistic policies can come back to bite you. The next time you hear a politician proclaiming a simple fix to a complex problem, check for the feedback cobras lurking in the bushes!

Systems Thinking and the Cobra Effect by Barry Newell and Christopher Doll is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License.